Many of us have spent years deploying Maximo into a server- and WebSphere-based architecture, so it is a known quantity. We are experienced with running different databases, using WebLogic, performing upgrades, and interfacing with external systems, even though each of these presents its own complexities.

The Maximo we know…

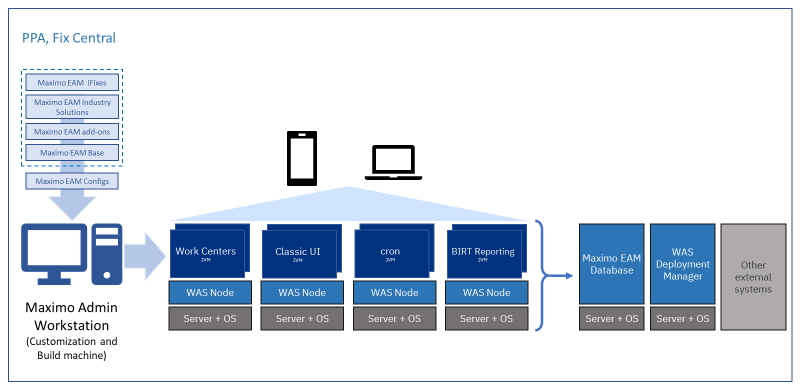

With the traditional Maximo deployment, all the needed pieces are loaded onto a single computer… the Administration workstation. From this computer the EAR and WAR files are generated, then deployed to the application server(s). It is quite possible that each workload (Work Centers, UI, Cron, Reporting) runs on its own physical server or VM as a node under WebSphere. Similarly, the underlying database and other external systems may be running on their own servers as well. In the end, Users access Maximo via a URL in their browser or mobile app/device. Administration is performed via the familiar MAXADMIN and WASADMIN user profiles.

Graphic courtesy of IBM.

Moving on to MAS…

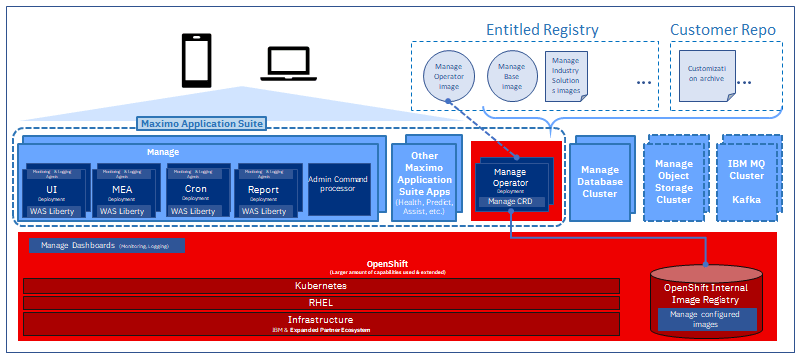

With Maximo Application Suite (MAS) on OpenShift, things are quite different. Red Hat OpenShift (let’s call it the operating system) may be running anywhere: perhaps on-prem on its own servers or VMs, or perhaps hosted for you out in the cloud. A main point of OpenShift is that the physical infrastructure it is running on does not matter.

OpenShift provides the monitoring, dashboards, and logging tools that staff need to administer the environment. Many open-source tools are also available if further administration functions are needed.

Once the OpenShift environment is operating, the next step is to retrieve the Images for Manage, Add-Ons, etc. from the Entitlement Registry based upon the client’s licensing situation. These images are the collection of the application classes, XML, scripts, etc. that make up the elements of MAS.

As a side note: Any client customizations are also collected in the Customization Archive, which the deployment process uses to bring them into the MAS deployment. If you are coming from a “customized” Maximo environment, analyzing the degree and location of customizations is critical. Depending on how well your customizations have been documented over the years, this may be a time-consuming up-front task. In the end you want the custom functions your Users require to be operational in the new MAS environment. (Or it is an opportunity to pass judgement on them and perhaps take a different route!)

Graphic courtesy of IBM.

Running within OpenShift is an Operator dedicated to Manage. This Operator retrieves the necessary images from the Entitlement Registry and ultimately performs the deployment, similar in function to the former Maximo Installer. It creates the configured Images and places them into the OpenShift Internal Registry for future use.

From the graphic above, you can see that the underlying Manage database can be in a cluster on its own, whether in the same OpenShift environment or not. It is technically possible that the database for Manage is hosted elsewhere, even on-premises, while Manage is hosted out in the cloud. There could be bandwidth/response time issues with this arrangement, but it is architecturally a valid scheme.
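To put a rough shape on the bandwidth/response time concern, here is a back-of-the-envelope sketch. The function name, the number of database calls, and the round-trip times are all illustrative assumptions, not measurements from any real Manage deployment.

```python
def added_wait_seconds(db_round_trips: int, round_trip_ms: float) -> float:
    """Network time a User waits when each database call crosses the wire.

    Assumes the calls are issued one after another (no batching or pipelining).
    """
    return db_round_trips * round_trip_ms / 1000.0


# Hypothetical screen that needs 200 sequential database calls.
same_site = added_wait_seconds(200, 0.5)    # database sitting next to Manage
cross_site = added_wait_seconds(200, 40.0)  # database on-premises, Manage in the cloud

print(f"same site:  {same_site:.1f} s")
print(f"cross site: {cross_site:.1f} s")
```

The point is only that per-call latency multiplies: a delay that is invisible for one query can become noticeable when a screen issues many of them in sequence.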

The Manage Object Storage Cluster is where attached documents are stored. Much like having an external file system for these files, this optional cluster could reside almost anywhere. Other storage mechanisms for attachments and Doclinks, such as S3, NFS, and EFS, are also supported by Manage 8.x.

Missing from the graphic above is another supporting database… MongoDB. This database is inherent to any MAS instance and is where MAS-level configurations are stored. Since Users are initially configured in MAS, then synced to elements like Manage, this arrangement maintains a separation between MAS and its various elements. While seamless to the MAS Administrator, it is worth knowing about. It is also worth mentioning that we now have a MASADMIN user profile to perform MAS-level tasks. MAXADMIN is still used, but only down within Manage to perform tasks such as Security Group configs.

Deploying…

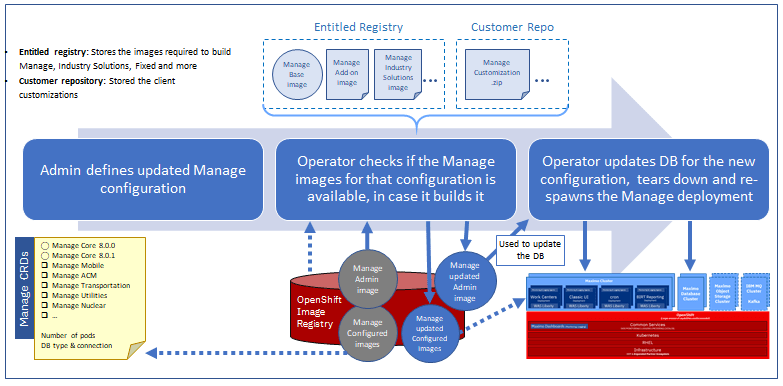

When the Operator is exercised, it gathers the images from the Entitlement Registry (Base, industry solutions, etc.), blends in anything from the Customization Archive, builds customized images of the Manage workloads the Administrator has specified, then places the images in the Internal Registry. Additionally, the Operator creates the containers from these workload images. These containers hold the typical workloads: All, UI, MEA, Cron, and Reports. It is worth noting the use of WAS Liberty within each container.

Graphic courtesy of IBM.
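The Operator sequence described above (gather the base images, blend in the Customization Archive, build one image per workload, push to the Internal Registry) can be pictured with a small model. This is only a conceptual sketch: the class names, function name, image names, and layer labels are all hypothetical and do not reflect how the actual Manage Operator is implemented.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Image:
    """A named image, described only by the layers that went into it."""
    name: str
    layers: Tuple[str, ...]


@dataclass
class InternalRegistry:
    """Stand-in for the OpenShift Internal Registry (hypothetical model)."""
    images: Dict[str, Image] = field(default_factory=dict)

    def push(self, image: Image) -> None:
        self.images[image.name] = image


def build_workload_images(base_images, customization_archive, workloads, registry) -> List[str]:
    """Build one customized image per requested workload and push each one."""
    built = []
    for workload in workloads:
        # Base content first, then customizations, then the workload-specific part.
        layers = tuple(base_images) + tuple(customization_archive) + (f"workload:{workload}",)
        image = Image(name=f"manage-{workload}", layers=layers)
        registry.push(image)
        built.append(image.name)
    return built


registry = InternalRegistry()
names = build_workload_images(
    base_images=["manage-base", "industry-solution"],
    customization_archive=["custom-classes"],
    workloads=["ui", "mea", "cron", "report"],
    registry=registry,
)
print(names)
```

Note how every workload image carries the same customizations. That is why getting the Customization Archive right up front matters so much.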

The final step for the Operator is to update the OpenShift configuration based upon the new containers, such as routing user traffic to the services these containers make up. While this is easy for a new deployment, if you are updating an existing environment, you need to ensure that Users are not impacted by downtime. Because this is dealt with by the internals of OpenShift, this downtime should be minimal.
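One way Kubernetes-based platforms keep downtime low during an update is a rolling replacement: start a new pod, then retire an old one, and repeat. The sketch below simulates that idea in a simplified form. Whether a given Manage update follows exactly this pattern is an assumption here, and the function and pod names are hypothetical.

```python
from typing import List, Tuple


def rolling_update(old_pods: List[str], new_version: str) -> Tuple[List[str], int]:
    """Replace pods one at a time.

    Returns the final pod list and the lowest number of pods that were
    serving after any replacement step.
    """
    serving = list(old_pods)
    lowest = len(serving)
    for index, old in enumerate(old_pods):
        serving.append(f"{new_version}-{index}")  # start the replacement first
        serving.remove(old)                        # then retire the old pod
        lowest = min(lowest, len(serving))
    return serving, lowest


pods, lowest = rolling_update(["v1-0", "v1-1", "v1-2"], "v2")
print(pods, lowest)
```

Because the replacement starts before the old pod is retired, the number of serving pods in this model never drops below where it started.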

The result is…

The graphic below is a typical arrangement, but every client is different. Depending on the size of the deployment, you could run multiple MAS instances in a single cluster. Each instance is its own image and operates a set of pods to deliver the applications and functions to the User. (Think of a pod as a running container that holds a Manage workload: in this case, WebSphere Liberty serving the Maximo UI/MEA/Cron/Reports/EAR. Each pod has its own status (active/inactive), a configuration file in YAML format, and logs that show what it is doing.)
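To make the idea of pod status a little more tangible, here is a minimal sketch that tallies pods by phase. It assumes input shaped like the pod list that `oc get pods -o json` returns (an `items` array whose entries carry `metadata.name` and `status.phase`). The pod names in the sample are made up for illustration.

```python
import json
from collections import Counter

# Hypothetical sample, trimmed to the fields used below.
SAMPLE = """
{"items": [
  {"metadata": {"name": "manage-ui-0"},   "status": {"phase": "Running"}},
  {"metadata": {"name": "manage-cron-0"}, "status": {"phase": "Running"}},
  {"metadata": {"name": "manage-mea-0"},  "status": {"phase": "Pending"}}
]}
"""


def phase_summary(pod_list_json: str) -> Counter:
    """Count pods by their reported phase."""
    pods = json.loads(pod_list_json)["items"]
    return Counter(pod["status"]["phase"] for pod in pods)


summary = phase_summary(SAMPLE)
print(dict(summary))
```

A quick tally like this is often the first thing to look at when a workload seems unresponsive: a pod stuck outside the Running phase tells you where to read the logs next.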

From a licensing standpoint, any MAS instance can share the configured license pool. The caution here is that if the MAS license function is set to restrict User access based upon the licenses available in the pool, you may run out if the instances are heavily used by a variety of User communities. AppPoint (license) sizing is an important step in any deployment. Certainly, you can add AppPoints at any time, but it is best practice to size correctly from the beginning to avoid User frustration and potential additional expenses.
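A rough sizing pass can be as simple as the arithmetic below. Treat everything here as a placeholder: the per-tier AppPoint costs, the tier names, the user counts, and the headroom percentage are assumptions for illustration, so substitute the figures from your own license terms and usage data.

```python
import math
from typing import Dict

# Placeholder AppPoint cost per concurrent User, by tier (assumed values).
APPPOINTS_PER_USER: Dict[str, int] = {"limited": 5, "base": 10, "premium": 15}


def required_apppoints(peak_concurrent_users: Dict[str, int], headroom_percent: int = 20) -> int:
    """Pool size needed to cover peak concurrent use plus a safety margin."""
    raw = sum(APPPOINTS_PER_USER[tier] * count
              for tier, count in peak_concurrent_users.items())
    return math.ceil(raw * (100 + headroom_percent) / 100)


# Hypothetical peak across all instances sharing the pool.
pool = required_apppoints({"limited": 40, "base": 25, "premium": 5})
print(pool)
```

If several MAS instances share one pool, add their peaks together before sizing. The pool is exhausted by total concurrent use, not by any single instance.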

Each MAS instance, whether in a shared cluster or not, has its own set of workloads (UI, MEA, etc.) as mentioned above. Capacity for the containers of each MAS instance is managed by OpenShift (orchestrated is the official term). Managing the load manually requires a bit of Kubernetes understanding and experience. It will take you some time to fine-tune the instances to suit your performance needs.
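As a taste of the Kubernetes knowledge involved, the Horizontal Pod Autoscaler documents a simple core rule for choosing a replica count: scale the current count by the ratio of the observed metric to the target metric, and round up. The sketch below applies that rule on its own. It leaves out the tolerance band and stabilization behavior of the real autoscaler, and whether your Manage workloads are autoscaled at all is an assumption to verify in your environment.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Core scaling rule: ceil(current_replicas * current_metric / target_metric)."""
    return math.ceil(current_replicas * current_metric / target_metric)


# Hypothetical UI workload: 3 pods averaging 90% CPU against a 60% target.
scale_up = desired_replicas(3, 90.0, 60.0)
# Hypothetical quiet period: 4 pods averaging 30% CPU against the same target.
scale_down = desired_replicas(4, 30.0, 60.0)

print(scale_up, scale_down)
```

Working a few of these by hand is a good way to sanity-check the minimum and maximum pod counts you configure for each workload.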

Finally, each cluster, with its one or more instances, can point to other clusters where the data is stored. These clusters could be “local,” or they could be very much elsewhere. The supported databases remain Db2, Oracle, and Microsoft SQL Server.

Graphic courtesy of IBM.

Wrapping up…

Very few of these details are known to MAS User communities. Rather, they simply access the MAS elements via the URLs provided, Manage for example, and do their work. With a properly administered OpenShift environment, deployments, upgrades, and even backup or recovery situations should go largely unnoticed. If you are going to adopt OpenShift in your in-house IT shop, the staff will need to develop the skills to support this architecture. If you are moving to a hosted environment, much of this will be below the waterline for you so you can focus on other aspects of your business.

Article by John Q. Todd, Sr. Business Consultant at TRM. Reach out to us at AskTRM@trmnet.com if you have any questions or would like to discuss deploying MAS 8 or Maximo AAM for condition-based maintenance / monitoring.
